Problem Description

- Scenario 1: The accumulated data grows too large and overflows.

- Scenario 2: Memory or GPU memory overflow.

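While running, AMCT allocates additional memory or GPU memory. When these resources are tight, the allocation can fail, and the system reports a resource allocation failure.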

Typical resource allocation failure messages are shown below.

In a CPU environment, a memory allocation failure produces a message similar to the following excerpt:

MemoryError: Unable to allocate array with shape (1048576, 102400) and data type float32

In a GPU environment, a GPU memory allocation failure produces a message similar to the following excerpt:


ResourceExhaustedError (see above for traceback): OOM when allocating tensor with shape[1,1073741824] and type int32 on /job:localhost/replica:0/task:0/device:GPU:0 by allocator G
[[node TopKV2_1 (defined at test_input_big_tensor.py:30) = TopKV2[T=DT_FLOAT, sorted=true, _device="/job:localhost/replica:0/task:0/device:GPU:0"](Reshape, Cast_1/_5)]]
Hint: If you want to see a list of allocated tensors when OOM happens, add report_tensor_allocations_upon_oom to RunOptions for current allocation info.
[[{{node QuantIfmr/_17}} = _Recv[client_terminated=false, recv_device="/job:localhost/replica:0/task:0/device:CPU:0", send_device="/job:localhost/replica:0/task:0/device:arnation=1, tensor_name="edge_55_QuantIfmr", tensor_type=DT_FLOAT, _device="/job:localhost/replica:0/task:0/device:CPU:0"]()]]
Hint: If you want to see a list of allocated tensors when OOM happens, add report_tensor_allocations_upon_oom to RunOptions for current allocation info.
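The shapes reported in the two messages above already indicate how much memory a single allocation asked for. The following is a rough back-of-the-envelope sketch, assuming dense tensors and 4 bytes per element for both float32 and int32. The helper names `tensor_bytes` and `to_gib` are illustrative and are not part of AMCT.

```python
from math import prod

# Bytes per element for the two data types named in the error messages.
ITEM_SIZE = {"float32": 4, "int32": 4}


def tensor_bytes(shape, dtype):
    """Return the number of bytes needed to hold one dense tensor."""
    return prod(shape) * ITEM_SIZE[dtype]


def to_gib(num_bytes):
    """Convert a byte count to GiB (2**30 bytes)."""
    return num_bytes / 2**30


# Shapes copied from the CPU and GPU error messages above.
cpu_request = tensor_bytes((1048576, 102400), "float32")
gpu_request = tensor_bytes((1, 1073741824), "int32")

print(f"CPU array:  {to_gib(cpu_request):.0f} GiB")  # prints "CPU array:  400 GiB"
print(f"GPU tensor: {to_gib(gpu_request):.0f} GiB")  # prints "GPU tensor: 4 GiB"
```

Comparing such an estimate with the free memory on the host or device gives a quick idea of how far the input size needs to shrink.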

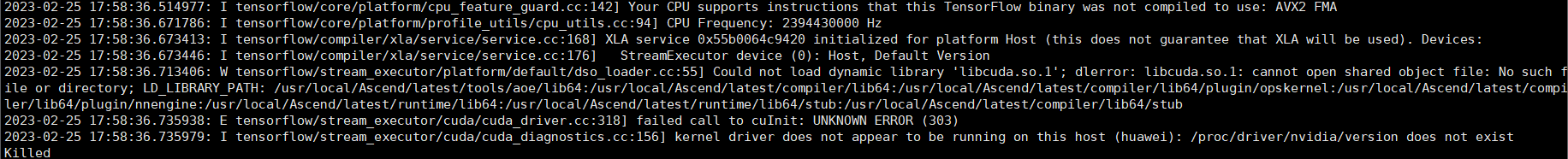

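- Scenario 3: The quantization process uses so much memory that the system runs out of memory. The system then terminates the process, and a "killed" message similar to the one in the figure is reported.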

Run the following command to view the system log, which contains an "oom-kill" record.

vi /var/log/messages

The system log reports the following:


169 huawei kerne: 0 678327 python3
170 Feb 27 03:30:38 huawei kernel: [2599806.621086] oom-kill:constraint=CONSTRAINT_NONE,nodemask=(null),cpuset=/,mems_allowed=0-1,global_oom,task_memcg=/user.slice/user-0.slice/session-7474.scope,task=amct_tensorflow,pid=678157,uid=0
171 Feb 27 03:30:38 huawei kernel: [2599809.519800] oom_reaper: reaped process 678157 (amct_tensorflow), now anon-rss:0kB, file-rss:0kB, shmem-rss:0kB
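Instead of paging through the log in vi, the oom-kill records can be filtered programmatically. The following is a minimal sketch, assuming the kernel writes the `task=` and `pid=` fields in the format shown in the log above. The function name `find_oom_kills` is illustrative, and the sample lines are taken from that log with the leading editor line numbers dropped.

```python
import re

# Matches a kernel oom-kill record and captures the killed task and its PID.
OOM_KILL = re.compile(r"oom-kill:.*?task=(?P<task>[^,\s]+),pid=(?P<pid>\d+)")


def find_oom_kills(lines):
    """Yield (task, pid) for every oom-kill record found in lines."""
    for line in lines:
        match = OOM_KILL.search(line)
        if match:
            yield match.group("task"), int(match.group("pid"))


# Sample records copied from the system log shown above.
sample = [
    "Feb 27 03:30:38 huawei kernel: [2599806.621086] oom-kill:"
    "constraint=CONSTRAINT_NONE,nodemask=(null),cpuset=/,mems_allowed=0-1,"
    "global_oom,task_memcg=/user.slice/user-0.slice/session-7474.scope,"
    "task=amct_tensorflow,pid=678157,uid=0",
    "Feb 27 03:30:38 huawei kernel: [2599809.519800] oom_reaper: reaped "
    "process 678157 (amct_tensorflow), now anon-rss:0kB, file-rss:0kB, "
    "shmem-rss:0kB",
]

kills = list(find_oom_kills(sample))
print(kills)  # prints [('amct_tensorflow', 678157)]
```

To scan a real log, pass an open file object for /var/log/messages to `find_oom_kills`. Reading that file usually requires elevated privileges.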

Solution

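Reduce the batch_num used for quantization. Because hardware resources differ, resource allocation may still fail after batch_num is reduced. In that case, lower batch_num and the size of each batch further, or run quantization on a platform with more hardware resources.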
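The reduction described above can be automated as a simple back-off loop. The following is only a sketch under stated assumptions: `run_calibration` is a hypothetical callable that wraps your own quantization script and is not an AMCT API, and the halving strategy is one possible choice. By default only `MemoryError` is retried. In a TensorFlow GPU environment, `tf.errors.ResourceExhaustedError` could be passed through `retry_on`. A process terminated by the system OOM killer (scenario 3) cannot be caught this way.

```python
def quantize_with_backoff(run_calibration, batch_num, batch_size,
                          retry_on=(MemoryError,)):
    """Call run_calibration, shrinking the workload after each failure.

    run_calibration is a hypothetical callable(batch_num, batch_size).
    batch_num is halved first; once it reaches 1, batch_size is halved.
    Returns (result, batch_num, batch_size) for the first successful run.
    """
    while True:
        try:
            return run_calibration(batch_num, batch_size), batch_num, batch_size
        except retry_on:
            if batch_num > 1:
                batch_num //= 2
            elif batch_size > 1:
                batch_size //= 2
            else:
                raise  # nothing left to shrink, surface the original error


# Stand-in for a real calibration run: fails while the workload is too big.
def fake_calibration(batch_num, batch_size):
    if batch_num * batch_size > 16:
        raise MemoryError("simulated allocation failure")
    return "ok"


result, used_num, used_size = quantize_with_backoff(fake_calibration, 8, 32)
print(result, used_num, used_size)  # prints "ok 1 16"
```

If the loop ends by re-raising the error, the remaining options are a platform with more hardware resources or a different calibration algorithm.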

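If the problem persists, try the HFMG algorithm: refer to the description of the simplified configuration file for post-training quantization, construct a simplified configuration file that includes the HFMG algorithm, and run quantization again.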