Normalize

功能说明

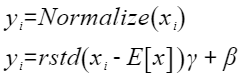

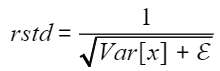

LayerNorm中,已知均值和方差,计算shape为[A,R]的输入数据的标准差的倒数rstd和y,其计算公式如下:

其中, 和

和 分别代表输入在R轴的均值,方差,γ为缩放系数,β为平移系数,ε为防除零的权重系数。

分别代表输入在R轴的均值,方差,γ为缩放系数,β为平移系数,ε为防除零的权重系数。

函数原型

- 通过sharedTmpBuffer入参传入临时空间

1 2

template < typename U, typename T, bool isReuseSource = false, const NormalizeConfig& config = NLCFG_NORM> __aicore__ inline void Normalize(const LocalTensor<T>& output, const LocalTensor<float>& outputRstd, const LocalTensor<float>& inputMean, const LocalTensor<float>& inputVariance, const LocalTensor<T>& inputX, const LocalTensor<U>& gamma, const LocalTensor<U>& beta, const LocalTensor<uint8_t>& sharedTmpBuffer, const float epsilon, const NormalizePara& para)

- 接口框架申请临时空间

1 2

template < typename U, typename T, bool isReuseSource = false, const NormalizeConfig& config = NLCFG_NORM> __aicore__ inline void Normalize(const LocalTensor<T>& output, const LocalTensor<float>& outputRstd, const LocalTensor<float>& inputMean, const LocalTensor<float>& inputVariance, const LocalTensor<T>& inputX, const LocalTensor<U>& gamma, const LocalTensor<U>& beta, const float epsilon, const NormalizePara& para)

由于该接口的内部实现中涉及复杂的计算,需要额外的临时空间来存储计算过程中的中间变量。临时空间支持接口框架申请和开发者通过sharedTmpBuffer入参传入两种方式。

- 接口框架申请临时空间,开发者无需申请,但是需要预留临时空间的大小。

- 通过sharedTmpBuffer入参传入,使用该tensor作为临时空间进行处理,接口框架不再申请。该方式开发者可以自行管理sharedTmpBuffer内存空间,并在接口调用完成后,复用该部分内存,内存不会反复申请释放,灵活性较高,内存利用率也较高。

接口框架申请的方式,开发者需要预留临时空间;通过sharedTmpBuffer传入的情况,开发者需要为tensor申请空间。临时空间大小BufferSize的获取方式如下:通过Normalize Tiling中提供的GetNormalizeMaxMinTmpSize接口获取所需最大和最小临时空间大小,最小空间可以保证功能正确,最大空间用于提升性能。

参数名 |

描述 |

||

|---|---|---|---|

U |

beta,gamma操作数的数据类型。 |

||

T |

inputX操作数的数据类型。 |

||

isReuseSource |

当前该参数为保留参数,默认值为false。 |

||

config |

配置Normalize接口中输入输出相关信息。NormalizeConfig类型,定义如下。

|

参数名称 |

输入/输出 |

含义 |

||

|---|---|---|---|---|

output |

输出 |

目的操作数,类型为LocalTensor,shape为[A, R],LocalTensor数据结构的定义请参考LocalTensor。 |

||

outputRstd |

输出 |

标准差的倒数,类型为LocalTensor,shape为[A],LocalTensor数据结构的定义请参考LocalTensor。 |

||

inputMean |

输入 |

均值,类型为LocalTensor,shape为[A],LocalTensor数据结构的定义请参考LocalTensor。 |

||

inputVariance |

输入 |

方差,类型为LocalTensor,shape为[A],LocalTensor数据结构的定义请参考LocalTensor。 |

||

inputX |

输入 |

源操作数,类型为LocalTensor,shape为[A, R],LocalTensor数据结构的定义请参考LocalTensor。inputX的数据类型需要与目的操作数保持一致,尾轴长度需要32B对齐。 |

||

gamma |

输入 |

缩放系数,类型为LocalTensor,shape为[R],LocalTensor数据结构的定义请参考LocalTensor。gamma的数据类型精度不低于源操作数的数据类型精度。 |

||

beta |

输入 |

平移系数,类型为LocalTensor,shape为[R],LocalTensor数据结构的定义请参考LocalTensor。beta的数据类型精度不低于源操作数的数据类型精度。 |

||

sharedTmpBuffer |

输入 |

共享缓冲区,用于存放API内部计算产生的临时数据。该方式开发者可以自行管理sharedTmpBuffer内存空间,并在接口调用完成后,复用该部分内存,内存不会反复申请释放,灵活性较高,内存利用率也较高。共享缓冲区大小的获取方式请参考Normalize Tiling。 类型为LocalTensor,支持的TPosition为VECIN/VECCALC/VECOUT。 |

||

epsilon |

输入 |

防除零的权重系数。 |

||

para |

输入 |

Normalize计算所需的参数信息。NormalizePara类型,定义如下。

|

返回值

无

支持的型号

注意事项

无

约束说明

- 操作数地址偏移对齐要求请参见通用约束。

- 缩放系数gamma和平移系数beta的数据类型精度必须不低于源操作数inputX的数据类型精度。比如,inputX的数据类型是half,gamma、beta的数据类型可以是half或者float,精度不低于inputX。

- src和dst的Tensor空间不可以复用。

- 输入仅支持ND格式。

- R轴不支持切分。

调用示例

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 157 158 159 160 161 162 | #include "kernel_operator.h" constexpr int32_t BUFFER_NUM = 1; // tensor num for each queue template <const AscendC::NormalizeConfig& CONFIG> class KernelNormalize { public: __aicore__ inline KernelNormalize() {} __aicore__ inline void Init(GM_ADDR x, GM_ADDR mean, GM_ADDR variance, GM_ADDR gamma, GM_ADDR beta, GM_ADDR rstd, GM_ADDR y, const float epsilon, const AscendC::NormalizePara& para) { this->meanRstdSize = (para.aLength + 7) / 8 * 8; // 此时进行32B对齐处理 // get start index for current core, core parallel xGm.SetGlobalBuffer((__gm__ DTYPE_X*)x, para.aLength * para.rLengthWithPadding); meanGm.SetGlobalBuffer((__gm__ float*)mean, this->meanRstdSize); varianceGm.SetGlobalBuffer((__gm__ float*)variance, this->meanRstdSize); gammaGm.SetGlobalBuffer((__gm__ DTYPE_GAMMA*)gamma, para.rLengthWithPadding); betaGm.SetGlobalBuffer((__gm__ DTYPE_BETA*)beta, para.rLengthWithPadding); rstdGm.SetGlobalBuffer((__gm__ float*)rstd, this->meanRstdSize); yGm.SetGlobalBuffer((__gm__ DTYPE_Y*)y, para.aLength * para.rLengthWithPadding); // pipe alloc memory to queue, the unit is Bytes pipe.InitBuffer(inQueueX, BUFFER_NUM, para.aLength * para.rLengthWithPadding * sizeof(DTYPE_X)); pipe.InitBuffer(inQueueMean, BUFFER_NUM, this->meanRstdSize * sizeof(float)); pipe.InitBuffer(inQueueVariance, BUFFER_NUM, this->meanRstdSize * sizeof(float)); pipe.InitBuffer(inQueueGamma, BUFFER_NUM, para.rLengthWithPadding * sizeof(DTYPE_GAMMA)); pipe.InitBuffer(inQueueBeta, BUFFER_NUM, para.rLengthWithPadding * sizeof(DTYPE_BETA)); pipe.InitBuffer(outQueueRstd, BUFFER_NUM, this->meanRstdSize * sizeof(float)); pipe.InitBuffer(outQueueY, BUFFER_NUM, para.aLength * para.rLengthWithPadding * sizeof(DTYPE_Y)); this->epsilon = epsilon; this->para = para; } __aicore__ inline void Compute() { AscendC::LocalTensor<DTYPE_X> xLocal = inQueueX.DeQue<DTYPE_X>(); AscendC::LocalTensor<float> meanLocal = inQueueMean.DeQue<float>(); AscendC::LocalTensor<float> varianceLocal = inQueueVariance.DeQue<float>(); AscendC::LocalTensor<DTYPE_GAMMA> gammaLocal = inQueueGamma.DeQue<DTYPE_GAMMA>(); AscendC::LocalTensor<DTYPE_BETA> betaLocal = inQueueBeta.DeQue<DTYPE_BETA>(); AscendC::LocalTensor<float> rstdLocal = outQueueRstd.AllocTensor<float>(); AscendC::LocalTensor<DTYPE_Y> yLocal = outQueueY.AllocTensor<DTYPE_Y>(); AscendC::Duplicate(rstdLocal, (float)0, this->meanRstdSize); AscendC::Duplicate(yLocal, (DTYPE_Y)0, para.aLength * para.rLengthWithPadding); AscendC::Normalize<DTYPE_Y, DTYPE_X, false, CONFIG>(yLocal, rstdLocal, meanLocal, varianceLocal, xLocal, gammaLocal, betaLocal, epsilon, para); outQueueRstd.EnQue<float>(rstdLocal); outQueueY.EnQue<DTYPE_Y>(yLocal); inQueueX.FreeTensor(xLocal); inQueueMean.FreeTensor(meanLocal); inQueueVariance.FreeTensor(varianceLocal); inQueueGamma.FreeTensor(gammaLocal); inQueueBeta.FreeTensor(betaLocal); } __aicore__ inline void Process() { CopyIn(); Compute(); CopyOut(); } private: __aicore__ inline void CopyIn() { // alloc tensor from queue memory AscendC::LocalTensor<DTYPE_X> xLocal = inQueueX.AllocTensor<DTYPE_X>(); AscendC::LocalTensor<float> meanLocal = inQueueMean.AllocTensor<float>(); AscendC::LocalTensor<float> varianceLocal = inQueueVariance.AllocTensor<float>(); AscendC::LocalTensor<DTYPE_GAMMA> gammaLocal = inQueueGamma.AllocTensor<DTYPE_GAMMA>(); AscendC::LocalTensor<DTYPE_BETA> betaLocal = inQueueBeta.AllocTensor<DTYPE_BETA>(); // copy progress_th tile from global tensor to local tensor AscendC::DataCopy(xLocal, xGm, para.aLength * para.rLengthWithPadding); AscendC::DataCopy(meanLocal, meanGm, this->meanRstdSize); AscendC::DataCopy(varianceLocal, varianceGm, this->meanRstdSize); AscendC::DataCopy(gammaLocal, gammaGm, para.rLengthWithPadding); AscendC::DataCopy(betaLocal, betaGm, para.rLengthWithPadding); // enque input tensors to VECIN queue inQueueX.EnQue(xLocal); inQueueMean.EnQue(meanLocal); inQueueVariance.EnQue(varianceLocal); inQueueGamma.EnQue(gammaLocal); inQueueBeta.EnQue(betaLocal); } __aicore__ inline void CopyOut() { // deque output tensor from VECOUT queue AscendC::LocalTensor<float> rstdLocal = outQueueRstd.DeQue<float>(); AscendC::LocalTensor<DTYPE_Y> yLocal = outQueueY.DeQue<DTYPE_Y>(); // copy progress_th tile from local tensor to global tensor AscendC::DataCopy(rstdGm, rstdLocal, this->meanRstdSize); AscendC::DataCopy(yGm, yLocal, para.aLength * para.rLengthWithPadding); // free output tensor for reuse outQueueRstd.FreeTensor(rstdLocal); outQueueY.FreeTensor(yLocal); } private: AscendC::TPipe pipe; // create queues for input, in this case depth is equal to buffer num AscendC::TQue<AscendC::QuePosition::VECIN, BUFFER_NUM> inQueueX; AscendC::TQue<AscendC::QuePosition::VECIN, BUFFER_NUM> inQueueMean; AscendC::TQue<AscendC::QuePosition::VECIN, BUFFER_NUM> inQueueVariance; AscendC::TQue<AscendC::QuePosition::VECIN, BUFFER_NUM> inQueueGamma; AscendC::TQue<AscendC::QuePosition::VECIN, BUFFER_NUM> inQueueBeta; // create queue for output, in this case depth is equal to buffer num AscendC::TQue<AscendC::QuePosition::VECOUT, BUFFER_NUM> outQueueRstd; AscendC::TQue<AscendC::QuePosition::VECOUT, BUFFER_NUM> outQueueY; AscendC::GlobalTensor<float> meanGm; AscendC::GlobalTensor<float> varianceGm; AscendC::GlobalTensor<DTYPE_X> xGm; AscendC::GlobalTensor<DTYPE_GAMMA> gammaGm; AscendC::GlobalTensor<DTYPE_BETA> betaGm; AscendC::GlobalTensor<float> rstdGm; AscendC::GlobalTensor<DTYPE_Y> yGm; float epsilon; uint32_t meanRstdSize; AscendC::NormalizePara para; }; __aicore__ constexpr AscendC::NormalizeConfig GenConfig(bool isNoBeta, bool isNoGamma) { return {.reducePattern = AscendC::ReducePattern::AR, .aLength = -1, .isNoBeta = isNoBeta, .isNoGamma = isNoGamma, .isOnlyOutput = false}; } // with beta and gamma constexpr AscendC::NormalizeConfig CONFIG1 = GenConfig(false, false); constexpr AscendC::NormalizeConfig CONFIG2 = GenConfig(false, true); constexpr AscendC::NormalizeConfig CONFIG3 = GenConfig(true, false); constexpr AscendC::NormalizeConfig CONFIG4 = GenConfig(true, true); extern "C" __global__ __aicore__ void normalize_custom(GM_ADDR x, GM_ADDR mean, GM_ADDR variance, GM_ADDR gamma, GM_ADDR beta, GM_ADDR rstd, GM_ADDR y, GM_ADDR workspace, GM_ADDR tiling) { GET_TILING_DATA(tilingData, tiling); float epsilon = tilingData.espilon; AscendC::NormalizePara para(tilingData.aLength, tilingData.rLength, tilingData.rLengthWithPadding); if (TILING_KEY_IS(1)) { if (!tilingData.isNoBeta && !tilingData.isNoGamma) { KernelNormalize<CONFIG1> op; op.Init(x, mean, variance, gamma, beta, rstd, y, epsilon, para); op.Process(); } else if (!tilingData.isNoBeta && tilingData.isNoGamma) { KernelNormalize<CONFIG2> op; op.Init(x, mean, variance, gamma, beta, rstd, y, epsilon, para); op.Process(); } else if (tilingData.isNoBeta && !tilingData.isNoGamma) { KernelNormalize<CONFIG3> op; op.Init(x, mean, variance, gamma, beta, rstd, y, epsilon, para); op.Process(); } else if (tilingData.isNoBeta && tilingData.isNoGamma) { KernelNormalize<CONFIG4> op; op.Init(x, mean, variance, gamma, beta, rstd, y, epsilon, para); op.Process(); } } } |