Sort

函数功能

排序函数,按照数值大小进行降序排序。一次迭代可以完成32个数的排序,数据需要按如下描述结构进行保存:

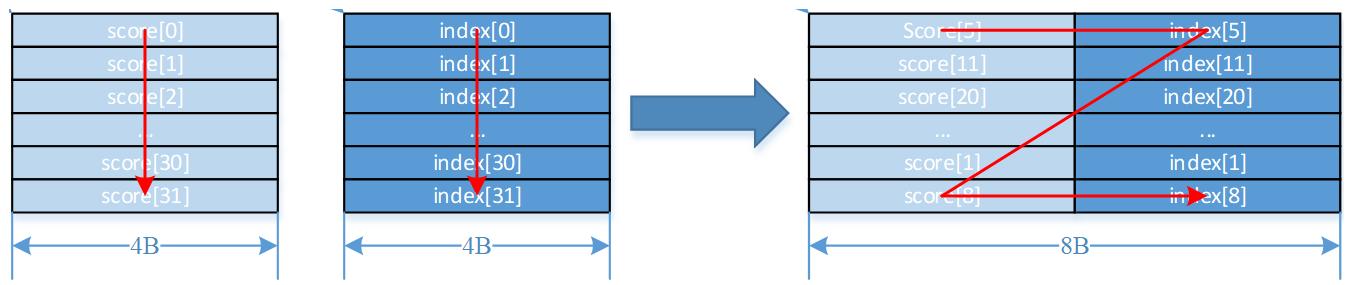

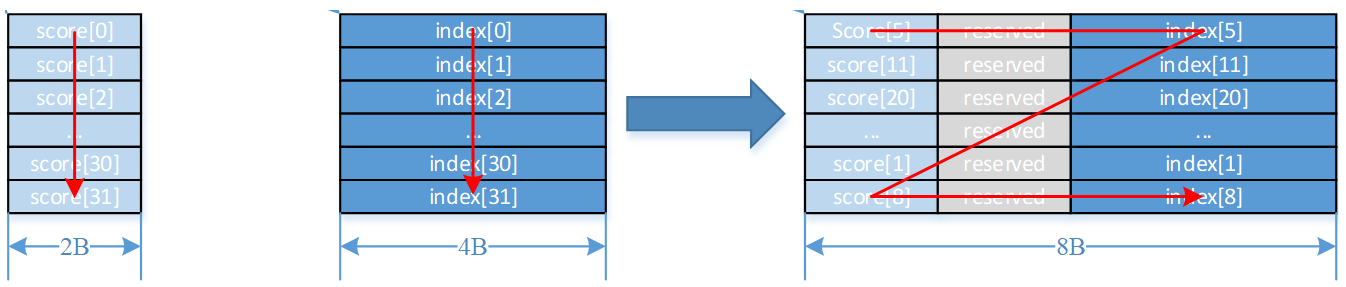

- Atlas A2训练系列产品/Atlas 800I A2推理产品:排序好的score与其对应的index一起以(score, index)的结构存储在 dstLocal中。不论 score 为 half 还是 float 类型,dstLocal 中的(score, index)结构总是占据8 Bytes空间。如下所示:

- Atlas 推理系列产品:输入输出数据均为Region Proposal,具体请参见ProposalConcat中的Region Proposal说明。

函数原型

template <typename T, bool isFullSort> __aicore__ inline void Sort(const LocalTensor<T> &dstLocal, const LocalTensor<T> &concatLocal, const LocalTensor<uint32_t> &indexLocal, LocalTensor<T> &tmpLocal, const int32_t repeatTimes)

参数说明

接口 |

功能 |

|---|---|

T |

操作数的数据类型。 |

isFullSort |

是否开启全排序模式。全排序模式指将全部输入降序排序,非全排序模式下,排序成每16个或32个有序,参考repeatTimes说明。 |

参数名称 |

输入/输出 |

含义 |

|---|---|---|

dstLocal |

输出 |

目的操作数。 类型为LocalTensor,支持的TPosition为VECIN/VECCALC/VECOUT。 Atlas A2训练系列产品/Atlas 800I A2推理产品,支持的数据类型为:half/float Atlas推理系列产品AI Core,支持的数据类型为:half/float |

concatLocal |

输入 |

源操作数。 类型为LocalTensor,支持的TPosition为VECIN/VECCALC/VECOUT。 此源操作数的数据类型需要与目的操作数保持一致。 Atlas A2训练系列产品/Atlas 800I A2推理产品,支持的数据类型为:half/float Atlas推理系列产品AI Core,支持的数据类型为:half/float |

indexLocal |

输入 |

源操作数。 类型为LocalTensor,支持的TPosition为VECIN/VECCALC/VECOUT。 此源操作数固定为uint32_t数据类型。 |

tmpLocal |

输入 |

临时空间。 类型为LocalTensor,支持的TPosition为VECIN/VECCALC/VECOUT。 此源操作数的数据类型需要与目的操作数保持一致。 Atlas A2训练系列产品/Atlas 800I A2推理产品,支持的数据类型为:half/float Atlas推理系列产品AI Core,支持的数据类型为:half/float |

repeatTimes |

输入 |

重复迭代次数,int32_t类型。

|

返回值

无

支持的型号

Atlas A2训练系列产品/Atlas 800I A2推理产品

Atlas推理系列产品AI Core

约束说明

- 当存在score[i]与score[j]相同时,如果i>j,则score[j]将首先被选出来,排在前面,即index的顺序与输入顺序一致。

- 非全排序模式下,每次迭代内的数据会进行排序,不同迭代间的数据不会进行排序。

- 操作数地址偏移对齐要求请参见通用约束。

调用示例

// Atlas A2训练系列产品/Atlas 800I A2推理产品样例,处理128个half类型数据 #include "kernel_operator.h" namespace AscendC { template <typename T> class FullSort { public: __aicore__ inline FullSort() {} __aicore__ inline void Init(__gm__ uint8_t* srcValueGm, __gm__ uint8_t* srcIndexGm, __gm__ uint8_t* dstValueGm, __gm__ uint8_t* dstIndexGm) { concatRepeatTimes = m_elementCount / 16; inBufferSize = m_elementCount * sizeof(uint32_t); outBufferSize = m_elementCount * sizeof(uint32_t); calcBufferSize = m_elementCount * 8; tmpBufferSize = m_elementCount * 8; sortedLocalSize = m_elementCount * 4; sortRepeatTimes = m_elementCount / 32; extractRepeatTimes = m_elementCount / 32; sortTmpLocalSize = m_elementCount * 4; m_valueGlobal.SetGlobalBuffer((__gm__ T*)srcValueGm); m_indexGlobal.SetGlobalBuffer((__gm__ uint32_t*)srcIndexGm); m_dstValueGlobal.SetGlobalBuffer((__gm__ T*)dstValueGm); m_dstIndexGlobal.SetGlobalBuffer((__gm__ uint32_t*)dstIndexGm); m_pipe.InitBuffer(m_queIn, 2, inBufferSize); m_pipe.InitBuffer(m_queOut, 2, outBufferSize); m_pipe.InitBuffer(m_queCalc, 1, calcBufferSize*sizeof(T)); m_pipe.InitBuffer(m_queTmp, 2, tmpBufferSize*sizeof(T)); } __aicore__ inline void Process() { CopyIn(); Compute(); CopyOut(); } private: __aicore__ inline void CopyIn() { LocalTensor<T> valueLocal = m_queIn.AllocTensor<T>(); DataCopy(valueLocal, m_valueGlobal, m_elementCount); m_queIn.EnQue(valueLocal); LocalTensor<uint32_t> indexLocal = m_queIn.AllocTensor<uint32_t>(); DataCopy(indexLocal, m_indexGlobal, m_elementCount); m_queIn.EnQue(indexLocal); } __aicore__ inline void Compute() { LocalTensor<T> valueLocal = m_queIn.DeQue<T>(); LocalTensor<uint32_t> indexLocal = m_queIn.DeQue<uint32_t>(); LocalTensor<T> sortedLocal = m_queCalc.AllocTensor<T>(); LocalTensor<T> concatTmpLocal = m_queTmp.AllocTensor<T>(); LocalTensor<T> sortTmpLocal = m_queTmp.AllocTensor<T>(); LocalTensor<T> dstValueLocal = m_queOut.AllocTensor<T>(); LocalTensor<uint32_t> dstIndexLocal = m_queOut.AllocTensor<uint32_t>(); LocalTensor<T> concatLocal; Concat(concatLocal, valueLocal, concatTmpLocal, concatRepeatTimes); Sort<T, true>(sortedLocal, concatLocal, indexLocal, sortTmpLocal, sortRepeatTimes); Extract(dstValueLocal, dstIndexLocal, sortedLocal, extractRepeatTimes); m_queTmp.FreeTensor(concatTmpLocal); m_queTmp.FreeTensor(sortTmpLocal); m_queIn.FreeTensor(valueLocal); m_queIn.FreeTensor(indexLocal); m_queCalc.FreeTensor(sortedLocal); m_queOut.EnQue(dstValueLocal); m_queOut.EnQue(dstIndexLocal); } __aicore__ inline void CopyOut() { LocalTensor<T> dstValueLocal = m_queOut.DeQue<T>(); LocalTensor<uint32_t> dstIndexLocal = m_queOut.DeQue<uint32_t>(); DataCopy(m_dstValueGlobal, dstValueLocal, m_elementCount); DataCopy(m_dstIndexGlobal, dstIndexLocal, m_elementCount); m_queOut.FreeTensor(dstValueLocal); m_queOut.FreeTensor(dstIndexLocal); } private: TPipe m_pipe; TQue<QuePosition::VECIN, 2> m_queIn; TQue<QuePosition::VECOUT, 2> m_queOut; TQue<QuePosition::VECIN, 2> m_queTmp; TQue<QuePosition::VECIN, 1> m_queCalc; GlobalTensor<T> m_valueGlobal; GlobalTensor<uint32_t> m_indexGlobal; GlobalTensor<T> m_dstValueGlobal; GlobalTensor<uint32_t> m_dstIndexGlobal; uint32_t m_elementCount = 128; uint32_t concatRepeatTimes; uint32_t inBufferSize; uint32_t outBufferSize; uint32_t calcBufferSize; uint32_t tmpBufferSize; uint32_t sortedLocalSize; uint32_t sortTmpLocalSize; uint32_t sortRepeatTimes; uint32_t extractRepeatTimes; }; // class FullSort } // namespace AscendC extern "C" __global__ __aicore__ void FullSort(__gm__ uint8_t* src0Gm, __gm__ uint8_t* src1Gm, __gm__ uint8_t* dst0Gm, __gm__ uint8_t* dst1Gm) { AscendC::FullSort<half> op; op.Init(src0Gm, src1Gm, dst0Gm, dst1Gm); op.Process(); } 示例结果 输入数据(srcValueGm): 128个float类型数据 [31 30 29 ... 2 1 0 63 62 61 ... 34 33 32 95 94 93 ... 66 65 64 127 126 125 ... 98 97 96] 输入数据(srcIndexGm): [31 30 29 ... 2 1 0 63 62 61 ... 34 33 32 95 94 93 ... 66 65 64 127 126 125 ... 98 97 96] 输出数据(dstValueGm): [127 126 125 ... 2 1 0] 输出数据(dstIndexGm): [127 126 125 ... 2 1 0] // 昇腾610 AI处理器AI Core/Atlas推理系列产品AI Core样例,处理64个half类型数据: #include "kernel_operator.h" namespace AscendC { template <typename T> class FullSort { public: __aicore__ inline FullSort() {} __aicore__ inline void Init(__gm__ uint8_t* srcValueGm, __gm__ uint8_t* srcIndexGm, __gm__ uint8_t* dstValueGm, __gm__ uint8_t* dstIndexGm) { concatRepeatTimes = m_elementCount / 16; inBufferSize = m_elementCount * sizeof(uint32_t); outBufferSize = m_elementCount * sizeof(uint32_t); calcBufferSize = m_elementCount * 8; tmpBufferSize = m_elementCount * 8; sortedLocalSize = m_elementCount * 8 * sizeof(T); sortRepeatTimes = m_elementCount / 16; extractRepeatTimes = m_elementCount / 16; sortTmpLocalSize = m_elementCount * 8 * sizeof(T); m_valueGlobal.SetGlobalBuffer((__gm__ T*)srcValueGm); m_indexGlobal.SetGlobalBuffer((__gm__ uint32_t*)srcIndexGm); m_dstValueGlobal.SetGlobalBuffer((__gm__ T*)dstValueGm); m_dstIndexGlobal.SetGlobalBuffer((__gm__ uint32_t*)dstIndexGm); m_pipe.InitBuffer(m_queIn, 2, inBufferSize); m_pipe.InitBuffer(m_queOut, 2, outBufferSize); m_pipe.InitBuffer(m_queCalc, 1, calcBufferSize*sizeof(T)); m_pipe.InitBuffer(m_queTmp, 2, tmpBufferSize*sizeof(T)); } __aicore__ inline void Process() { CopyIn(); Compute(); CopyOut(); } private: __aicore__ inline void CopyIn() { LocalTensor<T> valueLocal = m_queIn.AllocTensor<T>(); DataCopy(valueLocal, m_valueGlobal, m_elementCount); m_queIn.EnQue(valueLocal); LocalTensor<uint32_t> indexLocal = m_queIn.AllocTensor<uint32_t>(); DataCopy(indexLocal, m_indexGlobal, m_elementCount); m_queIn.EnQue(indexLocal); } __aicore__ inline void Compute() { LocalTensor<T> valueLocal = m_queIn.DeQue<T>(); LocalTensor<uint32_t> indexLocal = m_queIn.DeQue<uint32_t>(); LocalTensor<T> sortedLocal = m_queCalc.AllocTensor<T>(); LocalTensor<T> concatTmpLocal = m_queTmp.AllocTensor<T>(); LocalTensor<T> sortTmpLocal = m_queTmp.AllocTensor<T>(); LocalTensor<T> dstValueLocal = m_queOut.AllocTensor<T>(); LocalTensor<uint32_t> dstIndexLocal = m_queOut.AllocTensor<uint32_t>(); LocalTensor<T> concatLocal; Concat(concatLocal, valueLocal, concatTmpLocal, concatRepeatTimes); Sort<T, true>(sortedLocal, concatLocal, indexLocal, sortTmpLocal, sortRepeatTimes); Extract(dstValueLocal, dstIndexLocal, sortedLocal, extractRepeatTimes); m_queTmp.FreeTensor(concatTmpLocal); m_queTmp.FreeTensor(sortTmpLocal); m_queIn.FreeTensor(valueLocal); m_queIn.FreeTensor(indexLocal); m_queCalc.FreeTensor(sortedLocal); m_queOut.EnQue(dstValueLocal); m_queOut.EnQue(dstIndexLocal); } __aicore__ inline void CopyOut() { LocalTensor<T> dstValueLocal = m_queOut.DeQue<T>(); LocalTensor<uint32_t> dstIndexLocal = m_queOut.DeQue<uint32_t>(); DataCopy(m_dstValueGlobal, dstValueLocal, m_elementCount); DataCopy(m_dstIndexGlobal, dstIndexLocal, m_elementCount); m_queOut.FreeTensor(dstValueLocal); m_queOut.FreeTensor(dstIndexLocal); } private: TPipe m_pipe; TQue<QuePosition::VECIN, 2> m_queIn; TQue<QuePosition::VECOUT, 2> m_queOut; TQue<QuePosition::VECIN, 2> m_queTmp; TQue<QuePosition::VECIN, 1> m_queCalc; GlobalTensor<T> m_valueGlobal; GlobalTensor<uint32_t> m_indexGlobal; GlobalTensor<T> m_dstValueGlobal; GlobalTensor<uint32_t> m_dstIndexGlobal; uint32_t m_elementCount = 64; uint32_t concatRepeatTimes; uint32_t inBufferSize; uint32_t outBufferSize; uint32_t calcBufferSize; uint32_t tmpBufferSize; uint32_t sortedLocalSize; uint32_t sortTmpLocalSize; uint32_t sortRepeatTimes; uint32_t extractRepeatTimes; }; // class FullSort } // namespace AscendC extern "C" __global__ __aicore__ void FullSort(__gm__ uint8_t* src0Gm, __gm__ uint8_t* src1Gm, __gm__ uint8_t* dst0Gm, __gm__ uint8_t* dst1Gm) { AscendC::FullSort<half> op; op.Init(src0Gm, src1Gm, dst0Gm, dst1Gm); op.Process(); } 示例结果 输入数据(srcValueGm): 128个float类型数据 [15 14 13 ... 2 1 0 31 30 29 ... 18 17 16 47 46 45 ... 34 33 32 63 62 61 ... 50 49 48] 输入数据(srcIndexGm): [15 14 13 ... 2 1 0 31 30 29 ... 18 17 16 47 46 45 ... 34 33 32 63 62 61 ... 50 49 48] 输出数据(dstValueGm): [63 62 61 ... 2 1 0] 输出数据(dstIndexGm): [63 62 61 ... 2 1 0]